Communicating With Others: Development and Use of Language

Dinesh Ramoo

Human language is the most complex behaviour on the planet and, at least as far as we know, in the universe. Language involves both the ability to comprehend spoken and written words and to create communication in real time when we speak or write. Most languages are oral, generated through speaking. Speaking involves a variety of complex cognitive, social, and biological processes, including operation of the vocal cords and the coordination of breath with movements of the throat, mouth, and tongue.

Other languages are sign languages, in which the communication is expressed by movements of the hands. The most common sign language is American Sign Language (ASL), which is used, often in locally adapted forms, in many countries around the world. The other main sign language used in Canada is la Langue des Signes Québécoise (LSQ); there is also a regional dialect, Maritime Sign Language (MSL).

Although language is often used for the transmission of information (e.g., “turn right at the next light and then go straight” or “place tab A into slot B”), this is only its most basic function. Language also allows us to access existing knowledge, to draw conclusions, to set and accomplish goals, and to understand and communicate complex social relationships. Language is fundamental to our ability to think; without it we would be much less intelligent than we are.

Language can be conceptualised in terms of sounds, meaning, and the environmental factors that help us understand them. Phonemes are the elementary sounds of our language; morphemes are the smallest units of meaning in a language; syntax is the set of grammatical rules that control how words are put together; and contextual information consists of the elements of communication that are not part of the content of language but that help us understand its meaning.

Psychological Consequences of Language Use

What are the psychological consequences of language use? When people use language to describe an experience, their thoughts and feelings are profoundly shaped by the linguistic representation that they have produced rather than the original experience (Holtgraves & Kashima, 2008). For example, Jamin Halberstadt (2003) showed a picture of a person displaying an ambiguous emotion and examined how people evaluated the displayed emotion. When people verbally explained why the target person was expressing a particular emotion, they tended to remember the person as feeling that emotion more intensely than when they simply labelled the emotion.

Thus, constructing a linguistic representation of another person’s emotion apparently biased the speaker’s memory of that person’s emotion. Furthermore, linguistically labelling one’s own emotional experience appears to alter the speaker’s neural processes. When people linguistically labelled negative images, the amygdala, a brain structure that is critically involved in the processing of negative emotions such as fear, was activated less than when they were not given a chance to label them (Lieberman et al., 2007). Potentially because of these effects of verbalising emotional experiences, linguistic reconstructions of negative life events can have some therapeutic effects on those who suffer from traumatic experiences (Pennebaker & Seagal, 1999). Sonja Lyubomirsky, Lorie Sousa, and Rene Dickerhoof (2006) found that writing and talking about negative past life events improved people’s psychological well-being, but just thinking about them worsened it. Furthermore, if a certain type of language use (i.e., linguistic practice) is repeated by a large number of people in a community, it can potentially have a significant effect on their thoughts and actions (Holtgraves & Kashima, 2008). This notion is often called the Sapir-Whorf hypothesis (Sapir, 1921; Whorf, 1956). For instance, if you are given a description of a man, Steven, as having greater than average experience of the world (e.g., well-travelled, varied job experience), a strong family orientation, and well-developed social skills, how do you describe Steven? Do you think you can remember Steven’s personality five days later? It will probably be difficult, but if you know Chinese and are reading about Steven in Chinese (the original paper does not specify whether this is Mandarin or Cantonese), as Curt Hoffman, Ivy Lau, and David Johnson (1986) showed, the chances are that you can remember him well.
This is because English does not have a word to describe this kind of personality, whereas Chinese does: shì gù. In this way, the language you use can influence your cognition. In its strong form, the hypothesis holds that language determines thought, but this is probably wrong. Language does not completely determine our thoughts, which are far too flexible for that, but habitual uses of language can influence our habits of thought and action. For instance, some linguistic practices, such as dropping pronouns, seem to be associated even with cultural values and social institutions. Pronouns such as “I” and “you” are used to represent the speaker and listener of an utterance in English. In an English sentence, these pronouns cannot be dropped if they are used as the subject of a sentence. So, for instance, “I went to the movie last night” is fine, but “Went to the movie last night” is not acceptable in standard English. However, in other languages such as Japanese, pronouns can be, and in fact often are, dropped from sentences. It turns out that people living in those countries where pronoun drop languages are spoken tend to have more collectivistic values (e.g., employees having greater loyalty toward their employers) than those who use non–pronoun drop languages such as English (Kashima & Kashima, 1998). It was argued that the explicit reference to “you” and “I” may remind speakers of the distinction between the self and other, and of the differentiation between individuals. Such a linguistic practice may act as a constant reminder of the cultural value, which, in turn, may encourage people to perform the linguistic practice.

An example of evidence for the Sapir-Whorf hypothesis comes from a comparison between English and Mandarin speakers (Boroditsky, 2000). In English, time is often metaphorically described in horizontal terms. For instance, good times are ahead of us, or hardship can be left behind us. We can move a meeting forward or backward. Mandarin speakers use similar horizontal metaphors too, but vertical metaphors are also used. So, for instance, the last month is called shàng gè yuè or “above month,” and the next month, xià gè yuè or “below month.” To put it differently, the arrow of time flies horizontally in English, but it can fly both horizontally and vertically in Chinese. Does this difference in language use affect English and Chinese speakers’ comprehension of language?

This is what Boroditsky (2000) found. First, English and Mandarin speakers’ understanding of sentences that use a horizontal positioning (e.g., June comes before August) did not differ much. When they were first presented with a picture that implies a horizontal positioning (e.g., the black worm is ahead of the white worm), they could read and understand these sentences faster than when they were first presented with a picture that implies a vertical positioning (e.g., the black ball is above the white ball). This implies that thinking about the horizontal positioning, when described as ahead or behind, equally primed (i.e., reminded) both English and Chinese speakers of the horizontal metaphor used in the sentence about time. However, English and Chinese speakers’ comprehension differed for statements that do not use a spatial metaphor (e.g., August is later than June). When primed with the vertical spatial positioning, Chinese speakers comprehended these statements faster, but English speakers more slowly, than when they were primed with the horizontal spatial positioning. Apparently, English speakers were not used to thinking about months in terms of the vertical line, when described as above or below. Indeed, when they were trained to do so, their comprehension was similar to that of Chinese speakers (Boroditsky, Fuhrman, & McCormick, 2010).

The idea that language and its structures influence and limit human thought is called linguistic relativity. The most frequently cited example of this possibility was proposed by Benjamin Whorf (1897–1941), a linguist who was particularly interested in Aboriginal languages. Whorf argued that the Inuit people of Canada had many words for snow, whereas English speakers have only one, and that this difference influenced how the different cultures perceived snow. Whorf argued that the Inuit perceived and categorised snow in finer detail than English speakers, possibly because the English language constrained perception. Although the idea of linguistic relativity seemed reasonable, research has suggested that language has less influence on thinking than might be expected. For one, in terms of perceptions of snow, although it is true that the Inuit do make more distinctions among types of snow than English speakers do, the latter also make some distinctions (e.g., think of words like powder, slush, whiteout, and so forth). It is also possible that thinking about snow may influence language, rather than the other way around.

In a more direct test of the possibility that language influences thinking, Eleanor Rosch (1973) compared people from the Dani culture of New Guinea, who have only two terms for colour, dark and bright, with English speakers who use many more terms. Rosch hypothesised that if language constrains perception and categorisation, then the Dani should have a harder time distinguishing colours than English speakers would. However, Rosch’s research found that when the Dani were asked to categorise colours using new categories, they did so in almost the same way that English speakers did. Similar results were found by Michael Frank, Daniel Everett, Evelina Fedorenko, and Edward Gibson (2008), who showed that the Amazonian tribe known as the Pirahã, who have no linguistic method for expressing exact quantities, not even the number one, were nevertheless able to perform matches with large numbers without problem.

Although these data led researchers to conclude that the language we use to describe colour and number does not influence our understanding of the underlying sensation, more recent research has questioned this conclusion. Debi Roberson, Ian Davies, and Jules Davidoff (2000) conducted a similar study with Berinmo participants from Papua New Guinea and found that, at least for some colours, the names that they used to describe colours did influence their perceptions of the colours. Other researchers continue to test the possibility that our language influences our perceptions, and perhaps even our thoughts (Levinson, 1998), and yet the evidence for this possibility is, as of now, mixed.

Development of Language

Psychology in Everyday Life: The Case of Genie

In the fall of 1970, a social worker in the Los Angeles area found a 13-year-old girl who was being raised in extremely neglectful and abusive conditions. The girl, who came to be known as Genie, had lived most of her life tied to a potty chair or confined to a crib in a small room that was kept closed with the curtains drawn. For a little over a decade, Genie had virtually no social interaction and no access to the outside world. As a result of these conditions, Genie was unable to stand up, chew solid food, or speak (Fromkin, Krashen, Curtiss, Rigler, & Rigler, 1974; Rymer, 1993). The police took Genie into protective custody.

Genie’s abilities improved dramatically following her removal from her abusive environment, and early on, it appeared she was acquiring language — much later than would be predicted by critical period hypotheses that had been posited at the time (Fromkin et al., 1974). Genie managed to amass an impressive vocabulary in a relatively short amount of time. However, she never mastered the grammatical aspects of language (Curtiss, 1981). Perhaps being deprived of the opportunity to learn language during a critical period impeded Genie’s ability to fully acquire and use language. Genie’s case, while not conclusive, suggests that early language input is needed for language learning. This is also why it is important to determine quickly if a child is deaf and to begin immediately to communicate in sign language in order to maximise the chances of fluency (Mayberry, Lock, & Kazmi, 2002).

All children with typical brains who are exposed to language will develop it seemingly effortlessly. They do not need to be taught explicitly how to conjugate verbs, they do not need to memorise vocabulary lists, and they will easily pick up any accent or dialect that they are exposed to. Indeed, children seem to learn to use language much more easily than adults do. You may recall that each language has its own set of phonemes that are used to generate morphemes, words, and so on. Babies can discriminate among the sounds that make up a language (e.g., they can tell the difference between the “s” in vision and the “ss” in fission), and they can differentiate between the sounds of all human languages, even those that do not occur in the languages that are used in their environments. However, by the time that they are about one year old, they can only discriminate among those phonemes that are used in the language or languages in their environments (Jensen, 2011; Werker & Lalonde, 1988; Werker & Tees, 2002).

Learning Language

Language learning begins even before birth because the fetus can hear muffled speech from outside the womb. Christine Moon, Robin Cooper, and William Fifer (1993) found that infants only two days old sucked harder on a pacifier when they heard their mothers’ native language being spoken, even when strangers were speaking it, than when they heard a foreign language. Babies are also aware of the patterns of their native language, showing surprise when they hear speech that has different patterns of phonemes than those they are used to (Saffran, Aslin, & Newport, 2004).

During the first year or so after birth, long before they speak their first words, infants are already learning language. One aspect of this learning is practice in producing speech. By the time they are six to eight weeks old, babies start making vowel sounds (e.g., ooohh, aaahh, goo) as well as a variety of cries and squeals to help them practice.

At about seven months, infants begin babbling, which is to say they are engaging in intentional vocalisations that lack specific meaning. Children babble as practice in creating specific sounds, and by the time they are one year old, the babbling uses primarily the sounds of the language that they are learning (de Boysson-Bardies, Sagart, & Durand, 1984). These vocalisations have a conversational tone that sounds meaningful even though it is not. Babbling also helps children understand the social, communicative function of language (Figure IL.19). Children who are exposed to sign language babble in sign by making hand movements that represent real language (Petitto & Marentette, 1991).

At the same time that infants are practicing their speaking skills by babbling, they are also learning to better understand sounds and eventually the words of language. One of the first words that children understand is their own name, usually by about six months, followed by commonly used words like “bottle,” “mama,” and “doggie” by 10 to 12 months (Mandel, Jusczyk, & Pisoni, 1995).

The infant usually produces their first words at about one year of age. It is at this point that the child first understands that words are more than sounds — they refer to particular objects and ideas. By the time children are two years old, they have a vocabulary of several hundred words, and by kindergarten their vocabularies have increased to several thousand words. By Grade 5, most children know about 50,000 words; by the time they are in university, most know about 200,000. This may vary for people who don’t attend university or complete higher education.

The early utterances of children contain many errors, for instance, confusing /b/ and /d/, or /c/ and /z/, and the words that children create are often simplified, in part because they are not yet able to make the more complex sounds of the real language (Dobrich & Scarborough, 1992). Children may say “keekee” for kitty, “nana” for banana, and “vesketti” for spaghetti in part because it is easier. Often these early words are accompanied by gestures that may also be easier to produce than the words themselves. Children’s pronunciations become increasingly accurate between one and three years, but some problems may persist until school age.

Most of a child’s first words are nouns, and early sentences may include only the noun. “Ma” may mean “more milk please,” and “da” may mean “look, there’s Fido.” Eventually the length of the utterances increases to two words (e.g., “mo ma” or “da bark”), and these primitive sentences begin to follow the appropriate syntax of the native language.

Because language involves the active categorisation of sounds and words into higher level units, children make some mistakes in interpreting what words mean and how to use them. In particular, they often make overextensions of concepts, which means they use a given word in a broader context than appropriate. For example, a child might at first call all adult men “daddy” or all animals “doggie.”

Children also use contextual information, particularly the cues that parents provide, to help them learn language. Infants are frequently more attuned to the tone of voice of the person speaking than to the content of the words themselves and are aware of the target of speech. Janet Werker, Judith Pegg, and Peter McLeod (1994) found that infants listened longer to a woman who was speaking to a baby than to a woman who was speaking to another adult.

Children learn that people are usually referring to things that they are looking at when they are speaking (Baldwin, 1993) and that the speaker’s emotional expressions are related to the content of their speech. Children also use their knowledge of syntax to help them figure out what words mean. If a child sees an adult point to a strange object and hears them say, “this is a dirb,” they will infer that a “dirb” is a thing, but if they hear them say, “this is one of those dirb things,” they will infer that “dirb” refers to the colour or another characteristic of the object. Additionally, if they hear the word “dirbing,” they will infer that “dirbing” is something that we do (Waxman, 1990).

How Children Learn Language: Theories of Language Acquisition

Psychological theories of language learning differ in terms of the importance they place on nature versus nurture, yet it is clear that both matter. Children are not born knowing language; they learn to speak by hearing what happens around them. At the same time, human brains, unlike those of any other animal, are wired in a way that leads them, almost effortlessly, to learn language.

Perhaps the most straightforward explanation of language development is that it occurs through principles of learning, including association, reinforcement, and the observation of others (Skinner, 1965). There must be at least some truth to the idea that language is learned because children learn the language that they hear spoken around them rather than some other language. Also supporting this idea is the gradual improvement of language skills with time. It seems that children modify their language through imitation, reinforcement, and shaping, as would be predicted by learning theories.

However, language cannot be entirely learned. For one, children learn words too fast for them to be learned through reinforcement. Between the ages of 18 months and five years, children learn up to 10 new words every day (Anglin, 1993). More importantly, language is more generative than it is imitative. Generativity refers to the fact that speakers of a language can compose sentences to represent new ideas that they have never before been exposed to. Language is not a predefined set of ideas and sentences that we choose when we need them, but rather a system of rules and procedures that allows us to create an infinite number of statements, thoughts, and ideas, including those that have never previously occurred. When a child says that they “swimmed” in the pool, for instance, they are showing generativity. No adult native speaker of English would ever say “swimmed,” so the child cannot have learned it by imitation, yet it is easily generated from the normal system of producing language.

Other evidence that refutes the idea that all language is learned through experience comes from the observation that children may learn languages better than they ever hear them. Deaf children whose parents do not use sign language very well nevertheless are able to learn it perfectly on their own, and they may even make up their own language if they need to (Goldin-Meadow & Mylander, 1998). A group of deaf children in a school in Nicaragua, whose teachers could not sign, invented a way to communicate through made-up signs (Senghas, Senghas, & Pyers, 2005). The development of this new Nicaraguan Sign Language has continued and changed as new generations of students have come to the school and started using the language. Although the original system was not a real language (i.e., it was created from scratch and did not descend from our ancestral past), it is coming closer and closer to one every year, showing the development of a new language in modern times.

The linguist Noam Chomsky is a believer in the nature approach to language, arguing that human brains contain a language acquisition device that includes a universal grammar that underlies all human language (Chomsky, 1965, 1972). According to this approach, each of the many languages spoken around the world — there are between 6,000 and 8,000 — is an individual example of the same underlying set of procedures that are hardwired into human brains. Chomsky’s account proposes that children are born with a knowledge of general rules of syntax that determine how sentences are constructed and then coordinate with the language the child is exposed to.

Chomsky differentiates between the deep structure of an idea — how the idea is represented in the fundamental universal grammar that is common to all languages — and the surface structure of the idea — how it is expressed in any one language. Once we hear or express a thought in surface structure, we generally forget exactly how it happened. At the end of a lecture, you will remember a lot of the deep structure (i.e., the ideas expressed by the instructor), but you cannot reproduce the surface structure (i.e., the exact words that the instructor used to communicate the ideas).

Although there is general agreement among psychologists that babies are genetically programmed to learn language, there is still debate about Chomsky’s idea that there is a universal grammar that can account for all language learning. Nicholas Evans and Stephen Levinson (2009) surveyed the world’s languages and found that none of the presumed underlying features of the language acquisition device were entirely universal. In their search, they found languages that did not have noun or verb phrases, that did not have tenses (e.g., past, present, future), and even some that did not have nouns or verbs at all, even though a basic assumption of a universal grammar is that all languages should share these features.

Bilingualism and Cognitive Development

Bilingualism, which is the ability to speak two languages, is becoming more and more frequent in the modern world. Nearly one-half of the world’s population, including 17% of Canadian citizens, grows up bilingual.

In Canada, education is under provincial jurisdiction; however, the federal government has been a strong supporter of establishing Canada as a bilingual country and has helped pioneer the French immersion programs in the public education systems throughout the country. In contrast, many US states have passed laws outlawing bilingual education in schools based on the idea that students will have a stronger identity with the school, the culture, and the government if they speak only English. This is, in part, based on the idea that speaking two languages may interfere with cognitive development. There appears to be little evidence for such an assertion. In fact, throughout most of human history, human beings have lived in multilingual societies. In literate societies, it was common for people to use one language for everyday use and another for official or literary purposes. Sanskrit in Ancient India and Southeast Asia, Greek in the Roman empire, Latin in Western Europe, Persian in the Ottoman and Mughal empires, and Classical Arabic in the Islamic World were all used as academic languages while other languages were used in day-to-day interactions.

A variety of minority language immersion programs are now offered across the country depending on need and interest. In British Columbia, for instance, the city of Vancouver established a new bilingual Mandarin Chinese-English immersion program in 2002 at the elementary school level in order to accommodate Vancouver’s strong historic and present-day ties to the Mandarin-speaking world. Similar programs have been developed for both Hindi and Punjabi to serve the large South Asian cultural community in the city of Surrey. By default, most schools in British Columbia teach in English, with French immersion options available. In both English and French schools, one can study and take government exams in Japanese, Punjabi, Mandarin, French, Spanish, and German at the secondary level.

Some early psychological research showed that, when compared with monolingual children, bilingual children performed more slowly when processing language, and their verbal scores were lower. However, these tests were frequently given in English, even when this was not the child’s first language, and the bilingual children tested were often of lower socioeconomic status than the monolingual children (Andrews, 1982).

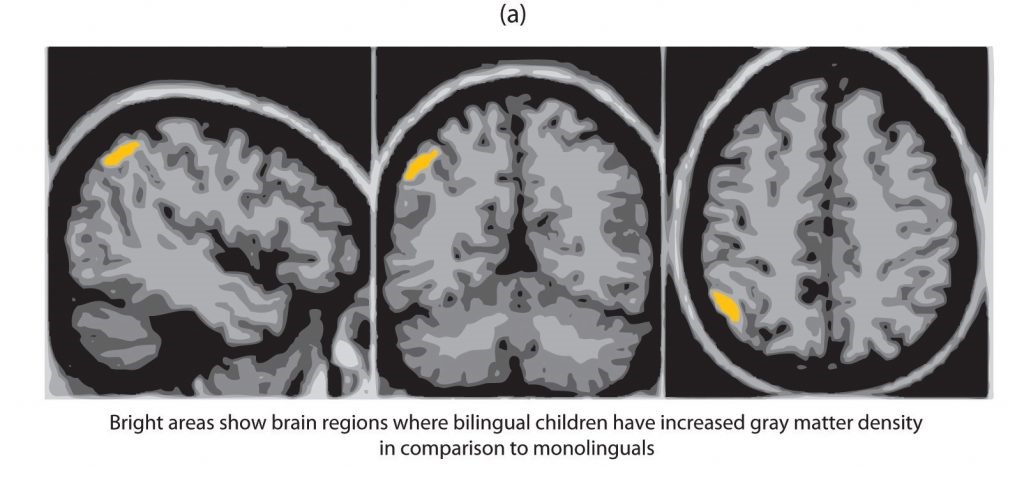

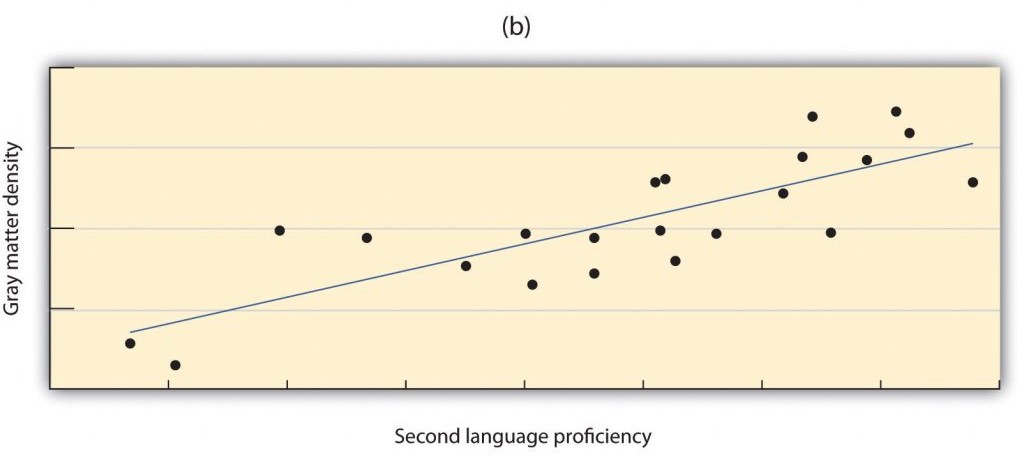

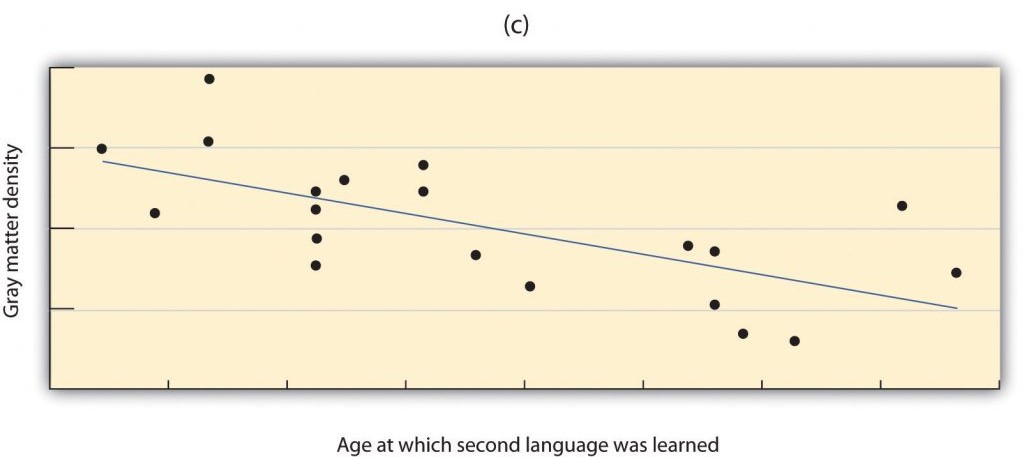

More current research that has controlled for these factors has found that, although bilingual children may, in some cases, learn language somewhat more slowly than do monolingual children (Oller & Pearson, 2002), bilingual and monolingual children do not significantly differ in the final depth of language learning, nor do they generally confuse the two languages (Nicoladis & Genesee, 1997). In fact, participants who speak two languages have been found to have better cognitive functioning, cognitive flexibility, and analytic skills in comparison to monolinguals (Bialystok, 2009). Research has also found that learning a second language produces changes in the area of the brain in the left hemisphere that is involved in language (Figure IL.20), such that this area is denser and contains more neurons (Mechelli et al., 2004). Furthermore, the increase in density is greater in those individuals who are most proficient in their second language and who learned the second language earlier. Thus, rather than slowing language development, learning a second language seems to increase cognitive abilities.

Biology of Language

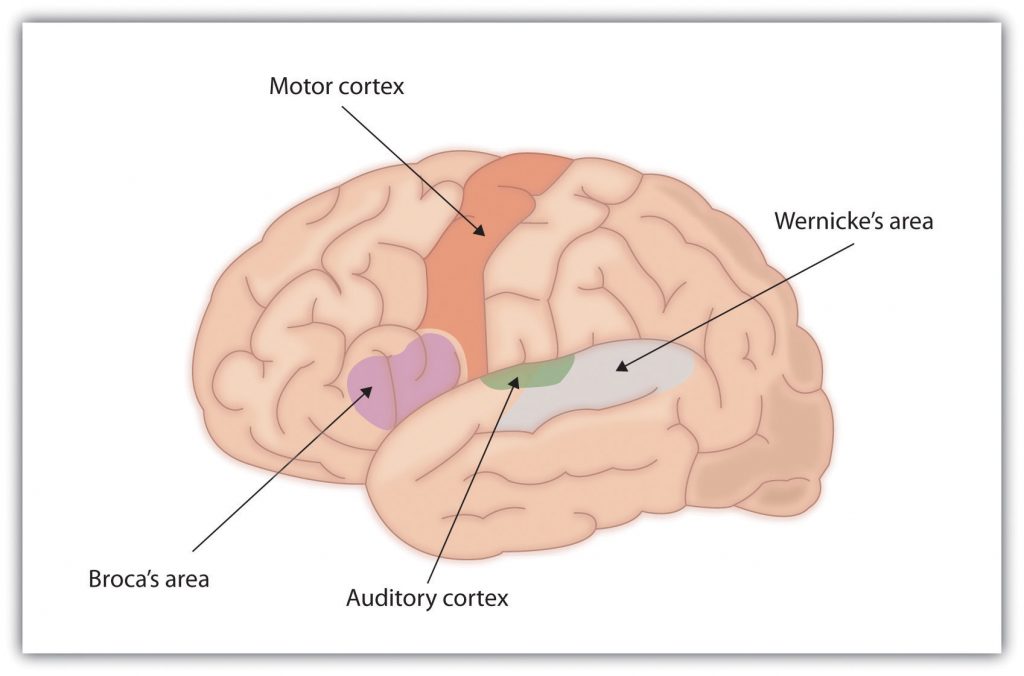

For the 90% of people who are right-handed, language is stored and controlled by the left cerebral cortex, while for some left-handers this pattern is reversed. These differences can easily be seen in the results of neuroimaging studies that show that listening to and producing language creates greater activity in the left hemisphere than in the right. Broca’s area, an area in the front of the left hemisphere near the motor cortex, is responsible for language production (Figure IL.21). This area was first localised in the 1860s by the French physician Paul Broca, who studied patients with lesions to various parts of the brain. Wernicke’s area, an area of the brain next to the auditory cortex, is responsible for language comprehension.

Evidence for the importance of Broca’s area and Wernicke’s area in language is seen in patients who experience aphasia, a condition in which language functions are severely impaired. People with Broca’s aphasia have difficulty producing speech, whereas people with damage to Wernicke’s area can produce speech, but what they say makes no sense, and they have trouble understanding language.